Pots, fyi

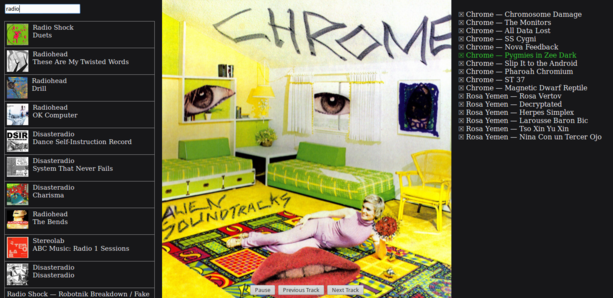

Streaming music webapp

Pots, fyi is a webapp for streaming your music collection. Code and setup instructions are on GitHub.

The idea is to run Pots, fyi’s server component on a home server or VPS with access to your music collection. Then you can listen to music on the go, on a laptop or phone, even if you have hundreds of gigabytes of music, without dragging around a big hard disk.

Unlike commercial music streaming services:

- You don’t have to pay anyone for the privilege of accessing music you own

- There are no ads

- No one’s tracking you

- You won’t lose access to your music if some company changes their business model

- All your music is available, even obscure bands the streaming services have never heard of

It’s built with Flask, Backbone.js and HTML5 audio. Current features include:

- Typeahead search

- Simple authentication through Persona (not in the live demo)

- Autodetects file format support and seamlessly transcodes tracks your browser can’t play

- Tested in Chrome and Firefox; may work in recent Safari

This is a work in progress and far from ready for prime time. If you try it, please do so with low expectations.

Notes

The rest of this page is devoted to my thoughts while developing the project. For most people, this will be too much information, but I post it publicly in the name of learning and sharing.

Jan 6th, 2013

Today I made some great progress. First I implemented basic search as you type in the existing bare-bones template. Then, once that was working, I created a new HTML template, and simplified the Backbone code by only having one view for the whole search result list.

Search as you type turned out to be pretty simple. I haven’t changed anything on the backend, so for the moment, it only searches the song’s title (not artist, album, etc.) and it’s purely a substring search. All it took was to add a key-up handler to the search box. When a key is released, we check if the search string has changed (it might not have if the key was, say, Shift or Ctrl). If so, we unset the active search timer, if any, and start a timer that will execute a search after 200 ms. The timer calls the exact same function that used to be called when you clicked the Search button.

The reason for using a timer is that, if someone is a fast typist, we don’t need to redo the search with every single keystroke. We just search when they pause for a moment. I tried some different times and 500ms felt very laggy, 350ms only slightly less so. 200ms feels responsive and smooth as butter, so there you have it. Of course this can be tweaked if the resulting server load on my Raspberry Pi is too high from all the queries! Doubt it will be, though, since this is a single-user app.
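The timer logic above can be sketched in a few lines. The real handler lives in the browser-side JavaScript; this is a Python rendering of the same idea so it can be run on its own, and the names DebouncedSearch and on_key_up are made up for illustration.

```python
import threading


class DebouncedSearch:
    """Call search(query) only after typing has paused for `delay` seconds."""

    def __init__(self, search, delay=0.2):
        self.search = search      # function to run once the user pauses
        self.delay = delay        # 200 ms, the value that felt responsive
        self.last_query = None
        self.timer = None

    def on_key_up(self, query):
        # Keys like Shift or Ctrl leave the text unchanged: nothing to do.
        if query == self.last_query:
            return
        self.last_query = query
        # Unset the active search timer, if any, then start a fresh one.
        if self.timer is not None:
            self.timer.cancel()
        self.timer = threading.Timer(self.delay, self.search, args=(query,))
        self.timer.start()
```

With this shape, a fast typist who enters "iggy" triggers one search for the final string rather than four, because each keystroke cancels the timer started by the previous one.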

One problem that frustrated me very much was that the Handlebars template was coming up blank for all the attributes. If there were 6 search results, sure enough, I would get six list items — but they would all be blank! Within the search result list view, I was doing:

resultArray = _(this.collection.models).toArray();

and passing resultArray to the template. What was wrong with the array? Inspected in Firebug, the array seemed to have a bunch of Backbone methods and stuff in it. I wondered why toArray() wasn’t giving me a normal array and did some frantic googling to try to figure it out.

As it turned out, toArray() was giving me a perfectly normal array. But it was an array of Backbone.Model objects! At this point I had two choices. I could map that array through a function like this:

resultArray = _.map(resultArray, function(item) { return item.attributes; });

and get just the attributes. Fortunately there’s no need for that ugly solution, as the Backbone collection object itself has a toJSON() method that does exactly what I need:

resultArray = this.collection.toJSON();

And with that correct version, the problem was happily solved.

Ironically, the current iteration can’t actually play music, while the last, crappy version could. But no matter. This is shaping up well.

Jan 27th, 2013

Pots, fyi is now playing music, and has been for a few days. You can search, click a search result to add it to the playlist, and click the first song on the playlist to start it playing.

However, search results come in an arbitrary order, cannot be scrolled past one screenful, and do not show what album they’re from (or any other information beyond artist and title). There is also no way to browse artists or albums in the collection, and no support for album art. There is no transcoding or client-side decoding, so only Ogg Vorbis can be played in Firefox, and FLAC cannot be played in any browser; these formats fail silently.

And, in what is potentially a huge problem, search queries are absurdly slow to return on the Raspberry Pi, apparently due to CPU usage within Python. The searches now are very dumb: nothing but a substring match on artist and title concatenated. A full token search will be much more sophisticated and span artists, albums and songs.

Jan 28th, 2013

Reading about tries. I think these might be useful for making find as you type in Pots, fyi faster. What if you created a trie with every token found in the database, then sent it, compressed, to the client, and that was used to search on the client side?
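To make the trie idea concrete, here is a minimal sketch of a token trie that maps every word in the track metadata to the IDs of the tracks containing it. It is a plain in-memory structure rather than any particular library, and the names Node, build_trie and prefix_search are hypothetical.

```python
class Node:
    def __init__(self):
        self.children = {}      # next character -> Node
        self.track_ids = set()  # tracks having a token that ends here


def build_trie(tracks):
    """tracks: iterable of (track_id, text) pairs, e.g. artist plus title."""
    root = Node()
    for track_id, text in tracks:
        for token in text.lower().split():
            node = root
            for ch in token:
                node = node.children.setdefault(ch, Node())
            node.track_ids.add(track_id)
    return root


def prefix_search(root, prefix):
    """Return the IDs of all tracks with a token starting with `prefix`."""
    node = root
    for ch in prefix.lower():
        node = node.children.get(ch)
        if node is None:
            return set()
    # Collect the IDs stored anywhere below the node the prefix leads to.
    found, stack = set(), [node]
    while stack:
        current = stack.pop()
        found |= current.track_ids
        stack.extend(current.children.values())
    return found
```

Note that this matches only the beginnings of words, so it behaves differently from the substring search the app does now: "po" finds "Pop", but "op" does not.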

Would it be too big? Maybe, but maybe not. My collection of 19,000 songs on the Raspberry Pi all fits in a mere 2.1MB tracks.db file, and that contains the same artist names over and over again, as well as long filenames, with no compression.

A suffix tree might also be a useful data structure to look into. I think Firefox’s token search actually uses a less efficient algorithm, as I get results much more slowly when typing “irefo” in the Awesome Bar than “firefo”. At least, this used to be the case: right now on Aurora 20 I’m finding both are pretty fast.

Anyway, I’m concerned that search as you type won’t be responsive enough with round-trips. But on the other hand, Google faces the same problem and still has Instant. In my case the bottleneck is the Raspberry Pi’s CPU coming up with the result, so perhaps I am focusing on the wrong angle of the problem.

Here are some benchmarks. On the RPi, I searched for two terms, a specific one and a very general one that has thousands of matches. I searched both directly through SQLite using its command line program, and via a curl request to Pots, fyi on localhost, going through the SQLAlchemy ORM and serializing the first 30 tracks as JSON.

Approximate results after 3 trials:

- time curl -s http://localhost:4004/search?q=p >/dev/null: 6sec

- time curl -s http://localhost:4004/search?q=iggy+pop >/dev/null: 0.6sec

- time sqlite3 tracks.db "SELECT * FROM track WHERE artist LIKE \"%p%\";" >/dev/null: 0.6sec

- time sqlite3 tracks.db "SELECT * FROM track WHERE artist LIKE \"%iggy pop%\";" >/dev/null: 0.4sec

My conclusion is that yes, the ORM adds an unacceptable slowdown… but this is only for the results that actually go through the ORM. In the example, I did something stupid: I asked for all results from the SQL query, but then lopped off the 31st and on from the array. If I limit the SQL query itself to 30 results before executing, then the first listed benchmark goes to about 0.6-0.8sec. So the ORM overhead may be acceptable for this application, where the number of results is small (30).
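The difference between the two approaches can be shown with a small stand-in. This sketch uses Python's built-in sqlite3 module on a throwaway in-memory table rather than SQLAlchemy and the real tracks.db; the row contents are made up, and only the track table's artist and title columns are taken from the queries above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE track (id INTEGER PRIMARY KEY, artist TEXT, title TEXT)"
)
conn.executemany(
    "INSERT INTO track (artist, title) VALUES (?, ?)",
    [("Artist %d" % i, "Song %d" % i) for i in range(1000)],
)

pattern = "%a%"  # a very general search that matches every row

# The stupid way: fetch every match, then throw away all but the first 30.
all_rows = conn.execute(
    "SELECT id, artist, title FROM track WHERE artist LIKE ?", (pattern,)
).fetchall()
sliced = all_rows[:30]

# The better way: let SQLite stop as soon as it has 30 rows.
limited = conn.execute(
    "SELECT id, artist, title FROM track WHERE artist LIKE ? LIMIT 30",
    (pattern,),
).fetchall()
```

Both variants end up with 30 rows, but the first one builds 1,000 row objects to get there, which is the work that was being multiplied by the ORM.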

Then again, the raw SQLite times are too long. I think for search as you type to be decently responsive over a network, which introduces its own latency, the production of results needs to be near-instant, at most 100ms.

In Python, I could use a trie library like Marisa-Trie or something related like DAWG. CompletionDAWG looks like it might be the best fit. There are other trie implementations reviewed in this article.

Jan 29th, 2013

I’m evolving towards a better understanding of the bottleneck here. When I did the above benchmarks, that was before adding LIMIT 30 to the SQL queries, and if I do add LIMIT 30, the results with SQLite are quite different.

With the limit, queries that yield many results are now faster than queries that yield few. It seems they find enough results within the first few rows checked to meet the limit, and can return early. If I search for something that doesn’t match any rows, like “xylitol”, that’s clearly a worst case:

$ time sqlite3 tracks.db "SELECT * FROM track WHERE artist LIKE \"%xylitol%\" LIMIT 30;" | wc -l

0

real 0m0.320s

user 0m0.220s

sys 0m0.080s

$ time sqlite3 tracks.db "SELECT * FROM track WHERE artist LIKE \"%p%\" LIMIT 30;" | wc -l

30

real 0m0.047s

user 0m0.000s

sys 0m0.030s

I haven’t found any LIKE query to take more than about 0.3sec with LIMIT 30 tacked onto it.

So direct SQLite requests are taking 40-330ms. What about through SQLAlchemy, the JSON serializer and Gunicorn? Testing as before (but this time going to localhost:8000 instead of 4004 to ignore any influence Nginx’s reverse proxying may have), I get results as low as 380ms (curl http://localhost:8000/search?q=e) and as high as 780ms (curl http://localhost:8000/search?q=salt, a rare word that takes longer to search, but still returns results needing to be serialized and JSONified).

After doing this, I noticed an inconsistency: my SQLite benchmarks were searching only titles, while the benchmark through Pots, fyi was searching both artist and title. Surprisingly, after modifying the code to search only titles, results seemed to take longer, with almost a full second for “salt”. I’m not sure if this is a fluke or what.

Anyway, to sum up:

- For search-as-you-type to work acceptably, I think the underlying queries need to be executed and converted to JSON in less than 100ms. Network latency comes on top of that, so 100ms is the most the server can afford to spend.

- After limiting the SQL query to 30 results, it’s down from >6sec(!) for some queries to a range of about 380-780ms, but this still adds a ridiculous degree of latency.

- I think the first step is to not use SQLAlchemy’s ORM but execute the SQL query directly; SQLite itself returns results in 40-330ms. The second might be to add an index or otherwise tune SQLite.

Benchmark, with SQLite CLI’s “.timer on”:

sqlite> SELECT track.artist, track.title, album.title FROM track, album \

WHERE track.artist || " " || track.title LIKE '%unseen%' AND \

track.album_id==album.id LIMIT 30;

[15 results]

CPU Time: user 0.300000 sys 0.000000
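As a rough illustration of skipping the ORM, the query from the benchmark can be run directly and turned into JSON with the standard library alone. This is a sketch, not the app's actual endpoint: the function name search_json and the JSON field names are assumptions, and the table and column names are the ones that appear in the benchmark query.

```python
import json
import sqlite3


def search_json(conn, query, limit=30):
    """Run the artist + title substring search directly and return JSON."""
    # Note: % and _ typed by the user act as LIKE wildcards here.
    pattern = "%" + query + "%"
    rows = conn.execute(
        "SELECT track.artist, track.title, album.title "
        "FROM track JOIN album ON track.album_id = album.id "
        "WHERE track.artist || ' ' || track.title LIKE ? "
        "LIMIT ?",
        (pattern, limit),
    ).fetchall()
    return json.dumps(
        [
            {"artist": artist, "title": title, "album": album}
            for artist, title, album in rows
        ]
    )
```

Passing the pattern and the limit as parameters keeps the user's search string out of the SQL text itself.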

Jan 30th, 2013

Found a fun little problem with ID3 tags. Several of my albums have correct year tags when viewed with EyeD3, but nonsensical year tags from the perspective of Mutagen: 2704, 2110, 2404, and 2811 to name a few.

When I open the files with Mutagen’s ID3 class in a Python REPL, the incorrect dates appear to be coming from a tag called TDRC. Strangely, the correct date for each album (e.g. 2008) doesn’t appear anywhere in the tag that Mutagen reads.

So, somehow — perhaps not through Mutagen, which seems to lack complete API documentation in any case — I need to read the same year that EyeD3 is reading and overwrite the TDRC tag with that (or simply remove the TDRC tag).

How very annoying. ID3 and its multiple, often ambiguous ways of representing similar information, far too often result in situations like this where different programs read different information out of the same tag.

Feb 3rd, 2013

Got transcode working today. Lots of stuff going on in that code, but I thought I’d just write what one of the bigger problems was (which I think I’ve fixed).

That is, when a track isn’t finished transcoding and transferring, but the user skips to the next track, it is essential, especially on the slow Raspberry Pi, to cancel the transcode task for the skipped track! Otherwise, the user skips to track 10 and now you have 10 different instances of avconv running at the same time and there’s no way it will keep up given the RPi can’t even encode Vorbis at 2x realtime, let alone 10x.

To get the proper behavior I had to write my own PipeWrapper class instead of using Flask’s FileWrapper to send the output of the avconv pipe. The only difference is that PipeWrapper terminates the underlying process after closing its stdout.
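The idea behind PipeWrapper can be sketched as follows. This is a reconstruction under assumptions, not the class from the repository: it is written for Python 3, the helper stream_command is hypothetical, and it relies on the WSGI convention that the server calls close() on the response iterable when the request ends, including when the client disconnects early.

```python
import subprocess


class PipeWrapper:
    """Iterate over a process's stdout in blocks; kill the process on close()."""

    def __init__(self, process, buffer_size=8192):
        self.process = process
        self.buffer_size = buffer_size

    def __iter__(self):
        return self

    def __next__(self):
        data = self.process.stdout.read(self.buffer_size)
        if data:
            return data
        raise StopIteration

    def close(self):
        # Close our end of the pipe, then make sure the process is gone,
        # so a skipped track does not keep transcoding in the background.
        self.process.stdout.close()
        if self.process.poll() is None:
            self.process.terminate()
        self.process.wait()


def stream_command(args):
    """Start a command (e.g. the transcoder) and wrap its stdout."""
    process = subprocess.Popen(args, stdout=subprocess.PIPE)
    return PipeWrapper(process)
```

The wait() at the end also reaps the terminated process, so skipped tracks do not leave zombie processes behind.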

Even this wasn’t enough because Firefox still kept downloading an audio source after the page content was refreshed to remove the <audio> tag. So to fix that I had to pause the track, then set its source attribute to the empty string, a trick I learned from MDN.

However, running with gunicorn, there was an even worse problem. Simply listening to a song would fail after 30 seconds or so causing Firefox to prematurely skip to the next song. Worse, on the server the transcoding process for the now-skipped song kept going! So a pattern developed of skipping from one song to the next until eventually there would be as many avconv processes as songs in the queue, none playing for more than a few seconds.

This was due to gunicorn’s default worker timeout of 30 seconds. I’ve worked around this by setting the worker timeout to 10,000 seconds, though I don’t know if this may open up the possibility of Slowloris-style DoS attacks. Something to consider later, perhaps, but for now it works.
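For reference, gunicorn reads its settings from a Python file passed with -c, so the workaround amounts to a single line. The file name below is only an example; the same value can also be given on the command line with --timeout.

```python
# gunicorn_config.py (example name), used as: gunicorn -c gunicorn_config.py ...
# Workers silent for longer than this many seconds are killed and restarted.
# The default is 30, which is shorter than most songs.
timeout = 10000
```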

Mar 9th, 2013

Though I haven’t been working on Pots, fyi for over a month, I still use it to listen to music. And now, looking at Twitter’s Typeahead.js project, I’m thinking: Why not do typeahead search entirely on the client side?

My database from one of my installations has over 19,000 songs and 2,600 albums. Dumping all rows from the “track” and “album” tables, excluding file names, then gzipping the result, yields a file of 357KB. That’s equivalent to 15 seconds of audio at 192kbps, so certainly small enough to send over the web. The Raspberry Pi’s CPU would then be freed up, and network latency would be irrelevant.
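The comparison with audio is simple arithmetic, spelled out here for anyone who wants to plug in their own numbers. The only assumption is that 1KB means 1,000 bytes; with 1,024 the result shifts by a fraction of a second.

```python
dump_bytes = 357 * 1000    # gzipped dump of the track and album tables
bitrate_bps = 192 * 1000   # 192kbps audio stream, in bits per second

# Bytes to bits, then divide by the bits consumed per second of audio.
seconds_of_audio = dump_bytes * 8 / bitrate_bps
print(round(seconds_of_audio, 1))  # 14.9
```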

However, unless there’s a server-side fallback, there would be a lag between first starting the app and typeahead search working, which sucks. Also the data could get stale if, in the future, tracks are added to the DB while a session is active; the new music you just downloaded might not be showing up. Finally, if a user has an order of magnitude more music than me, which isn’t inconceivable, the resulting 3.5MB data file would probably be impractically large.

Adding authentication (Mar 20th, 2013)

Today I added authentication using Mozilla’s Persona and the Flask plugin for it, Flask-BrowserID.

In the current setup, you can set an ADMIN_EMAIL environment variable to control access, and then only that email (through Persona) can access the site. If ADMIN_EMAIL is not set (the default), then anyone who logs in with Persona using any email address is allowed in.
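The access rule described above boils down to a small check. This sketch is independent of Flask-BrowserID; the function name is_allowed is hypothetical, and the exact string comparison (no case folding) is an assumption.

```python
import os


def is_allowed(email, environ=os.environ):
    """Decide whether a Persona-verified email may use the site."""
    if not email:
        return False  # not logged in at all
    admin_email = environ.get("ADMIN_EMAIL")
    if not admin_email:
        return True   # ADMIN_EMAIL unset: any logged-in user gets in
    return email == admin_email
```

Taking the environment as a parameter makes the rule easy to exercise with a plain dict instead of real environment variables.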

Persona is pretty slick. I dug how easy it was to get going.

Mozilla’s .js include for Persona conflicted with my application code for unknown reasons, but probably having something to do with Require.js and jQuery. I managed to get it to work by loading jQuery directly from the HTML files and removing it from my Require config.

Also, the default JS supplied by Flask-BrowserID gives very user-unfriendly error messages, popping up an alert that says only “login failure: error”. It looks like I’ll have to replace Flask-BrowserID’s JavaScript code with my own to improve this.

Access control only applies to the dynamic endpoints, so if a direct URL to an .mp3 or album cover .jpg ever gets passed around in public, it’s wide open. I’m not sure how to fix this other than passing all static files through Flask. But then, all transcoded files pass through Flask already, so I guess I might as well.

The login screen and the logout button are ugly. For now, not too concerned with that. I think I will redesign the app entirely at some point soon.

Switching to Browserify (Mar 31st, 2013)

I switched the client-side code from RequireJS to Browserify, resulting in a massive commit.

“Showing 26 changed files with 615 additions and 17,426 deletions.”

Sadly this makes the history useless for all the client-side code… every line was changed (with Browserify you don’t have to wrap your whole file in a function, so it’s all been unindented). Hopefully worth it though. RequireJS always rubbed me the wrong way. Lots of boilerplate and complexity, and rather poorly organized documentation. After reading Esa-Matti Suuronen’s blog post on moving to Browserify, I was sold.

So now most of the dependencies are handled through npm: everything except jQuery and the LocalStorage adapter for Backbone. The client-side JS is served in a one-file bundle, which you update by typing make. It works great with TJ Holowaychuk’s watch; I plan to write a short blog post on the combination. [Edit: the blog is here.]

In the coming days I’ll be doing some further refactoring to clean up the dependencies between models and views.

Refactoring (Apr 2nd, 2013)

This morning I finished a refactor of the client-side code. Each model and view is in its own file now, with its dependencies explicitly required at the top of the file. There are still a couple global references to the PlayingSong model (from within the Playlist model) and to the Playlist model (from the PlayingSongView and PlaylistItemView). I’ve handled these by attaching the PlayingSong and Playlist models to the window object.

It isn’t a perfect solution, but it’s the best I could think up for now. In theory the stuff the global references are doing:

models/Playlist.js: window.playingSong.changeSong(null);

models/Playlist.js: window.playingSong.changeSong(newSong);

views/PlayingSongView.js: if (!window.playlist.nextSong()) {

views/PlayingSongView.js: window.playlist.prevSong();

views/PlaylistItemView.js: window.playlist.seekToSong(this.model.cid);

views/PlaylistItemView.js: window.playlist.removeSong(this.model);

…should perhaps all be in a central controller, listening to events on these models/views. But to do that would add complexity, listening to events in one place only to trigger different events and catch them elsewhere. A trade-off not necessarily worth making.

React conversion (Jan 6th, 2014)

Since the last log entry, I:

- Introduced some backend tests, with help from Brady Ouren

- Fixed the login page randomly showing a background image it wasn’t meant to have

- Added drag and drop playlist reordering

- Added a status indicator to the DB update command

- Fixed deleted albums sticking around after a DB update

- Added a NO_LOGIN option for local, offline use and development

None of that is very exciting, and the nature of these changes — small fixes and tweaks — goes to show what a back-burner project Pots, fyi has been since April. My focus has been elsewhere.

But I want to get serious about it, adding the obvious missing features (like Pause, a seek bar, playlists you can edit) and redesigning the UI so it doesn’t suck.

I’m trying out something I hope will make UI experiments easier: switching to React for the views. In the process, I want to adopt some of the non-React-specific practices from React developer Pete Hunt’s react-browserify-template. Instead of my hacky approach using a Makefile, Pete’s example relies on the purpose-made watchify command to automatically rebuild my JavaScript bundle when a source file changes.

This is a little complicated, though, because I have two JavaScript “bundles”, one of which is just a copy of login.js, served on the login page. Why do I have a separate JavaScript file for the login page? Well, partly as an optimization, but mainly to fix the login page bug I describe above. The bug happened because the login page is meant to be totally static, but was executing some Backbone init code. If I’m careful, maybe I can work around this by having the JavaScript bundle figure out if it’s running on the login page or the main application page, and only have one bundle. The optimization is hardly necessary.

So the way Persona works has changed. Instead of passing callbacks to navigator.id.get and navigator.id.logout, I’m supposed to pass them in advance to navigator.id.watch, like this:

navigator.id.watch({

onlogin: exports.gotAssertion,

onlogout: exports.logoutCallback

});

and then call request() and logout() without arguments. Unfortunately, this doesn’t work with how my login and logout callbacks are structured: when you use watch(), as soon as Persona loads and initializes, it decides whether it believes the user is logged in or not, and calls your login or logout callback, respectively. This is before any action is taken! Since both my login and logout callbacks reload the page, the result is the login page reloading over and over.

Since I’m working on a different issue right now (doing away with the separate bundle for login), I’ll worry about updating my Persona code later and for now continue to use the deprecated callback arguments.

Hmm, watchify immediately quits if I use the -v option. Bug? Maybe buggy interaction with hbsfy or something? If it always does that, seems obvious enough for the watchify maintainers not to miss it (and no issues on GitHub about it). For now, I’ve elided the -v from my options.

I reactified the SearchResultListView only, and now I’m reactifying the whole app using a new AppView. And I just realized something annoying about React: unless I’m mistaken, you have to have an arbitrary extra layer of HTML at your root component.

That is, I need an empty container for all the React stuff to go into as React will replace the contents (I think):

<div id="app-container">

</div>

But then when my AppView’s render method is called, it can only return one top-level element. (“It should… return a single child component.”) So inside the app-container div, I’ll have an app div, serving no semantic purpose. That’s annoying.

Maybe I am misunderstanding — if renderComponent doesn’t replace the container passed, only inserts the dynamic stuff at the beginning, then this would be avoidable. But it doesn’t seem to do that. Hm.

For now, I will just have the redundant app-container and app divs, and look into this later.

At first, my React view rewrite won’t have drag to sort. Later, I’ll implement it using this gist by Pete Hunt as a guide.

Hey, is it not necessary to manually do .bind(this) on React class methods when passing them to components in JSX? The sortable example doesn’t bind manually and seems to work fine. Maybe React.createClass automatically binds all methods to the instance?

My suspicions are correct! I hadn’t noticed this warning in the console:

'bind(): You are binding a component method to the component. ' +

'React does this for you automatically in a high-performance ' +

'way, so you can safely remove this call. See ' + componentName

Sortable list in React (Jan 7th, 2014)

So I found an easier way to do sortable than Pete Hunt’s example. In the Sortable ‘stop’ callback, I call $(rootNode).sortable('cancel') to prevent jQuery UI from actually moving the object. The DOM remains undisturbed, and React is happy. Then I call the reorder method on my model, which triggers React to render the list with the reorder taken into account.